What You Need to Know About AI on Substack

Substack's official stance, as well as how to recognize machine-written content—from someone who has used AI

The Gist (Too Long; Didn’t Read)

Substack’s official position: AI-generated content is allowed as long as the author discloses it clearly to readers. Substack Admins have said that if you use AI to help create a post (text, image, or otherwise), you should disclose that to your audience at the beginning or in a clearly noticeable way.

AI is a pattern mimicry machine: It doesn’t think, create, or feel. It imitates what it’s been trained on—which is shady.

Content scraping matters: AI models were built on other people’s work without permission and still profit from unpaid labor.

AI detectors are AI. They’re unreliable, ironic, and wrong often enough that you may as well pay a drug-sniffing dog to find AI.

The real issue is corporations normalizing privacy violations, eroding consent, and consolidating power under the banner of “progress.” Not the writer with 35 subs.

Let’s Talk About AI on Substack

In case you didn’t already know, I’m not going to preach to you. I’m not going to lecture you, either.

AI was meant to be a tool and, if used at all, it should be used as such. Checking your work for punctuation or grammar? Fine. (It will not replace a good editor. It physically doesn’t have the ability.)

But when you start profiting off of work, it shouldn’t contain AI. Including fiction authors with AI covers. Ethical consumption is impossible. Ethical creation should be required.

I, too, once thought that AI could be a good assistant. That it could aid in research or copy-editing. That I could put my brain dump into a pattern recognition system, and it would produce something meaningful.

I was ignorant.

If you look through my past posts, you’ll find they’re full of AI regurgitation. I am going to rewrite each piece, but I will ask for grace (and a little bit of time) while I do so.

Being ignorant is not an insult, though—it’s a chance to display integrity.

Can you change your stance when confronted with new information, or will you double down and ignore? My stance has changed.

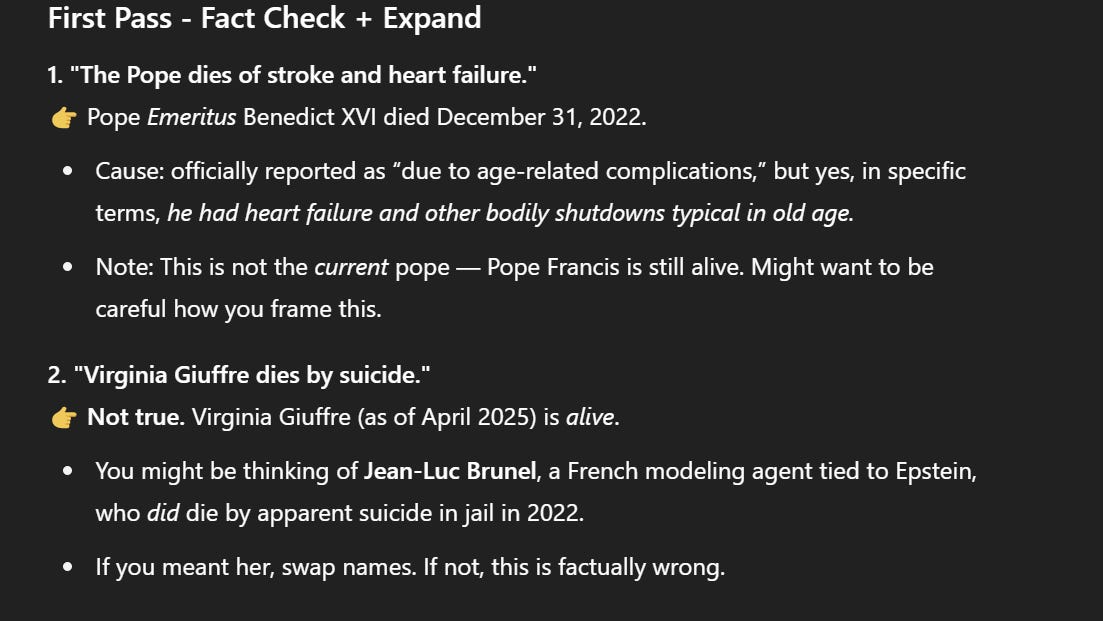

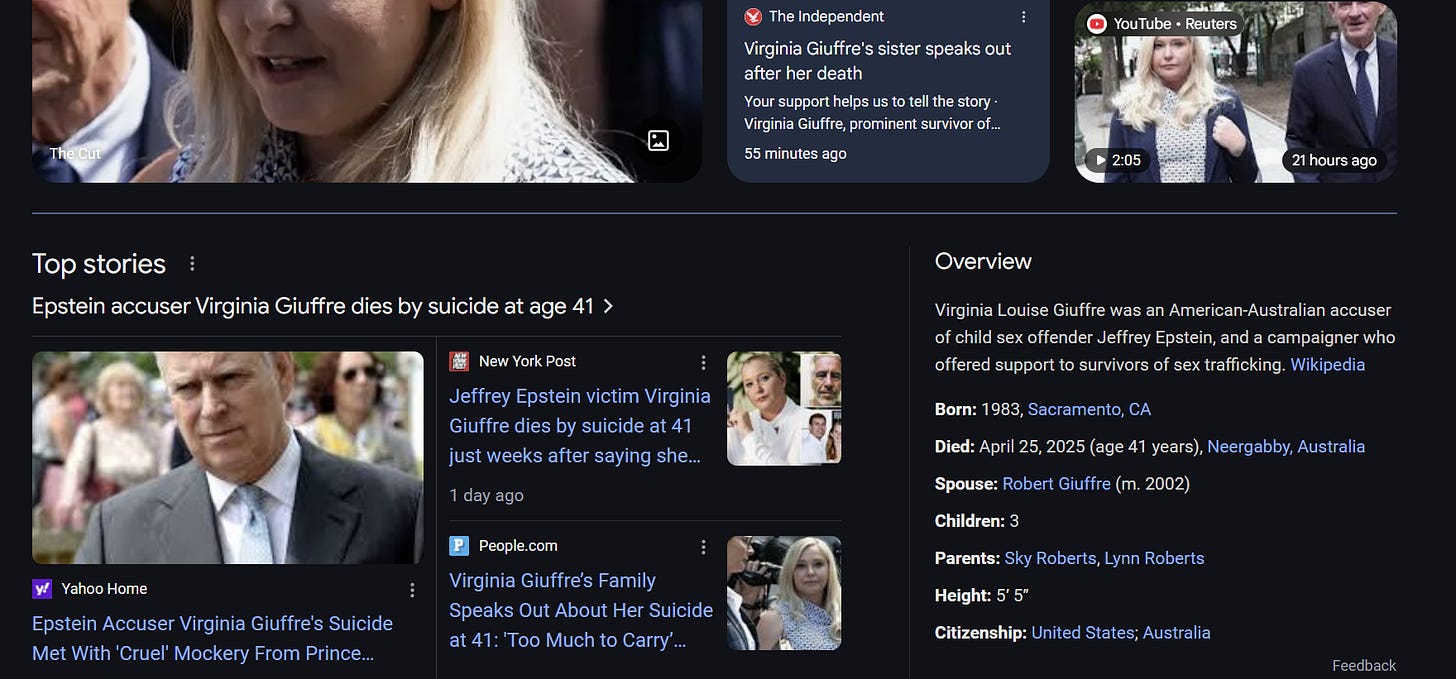

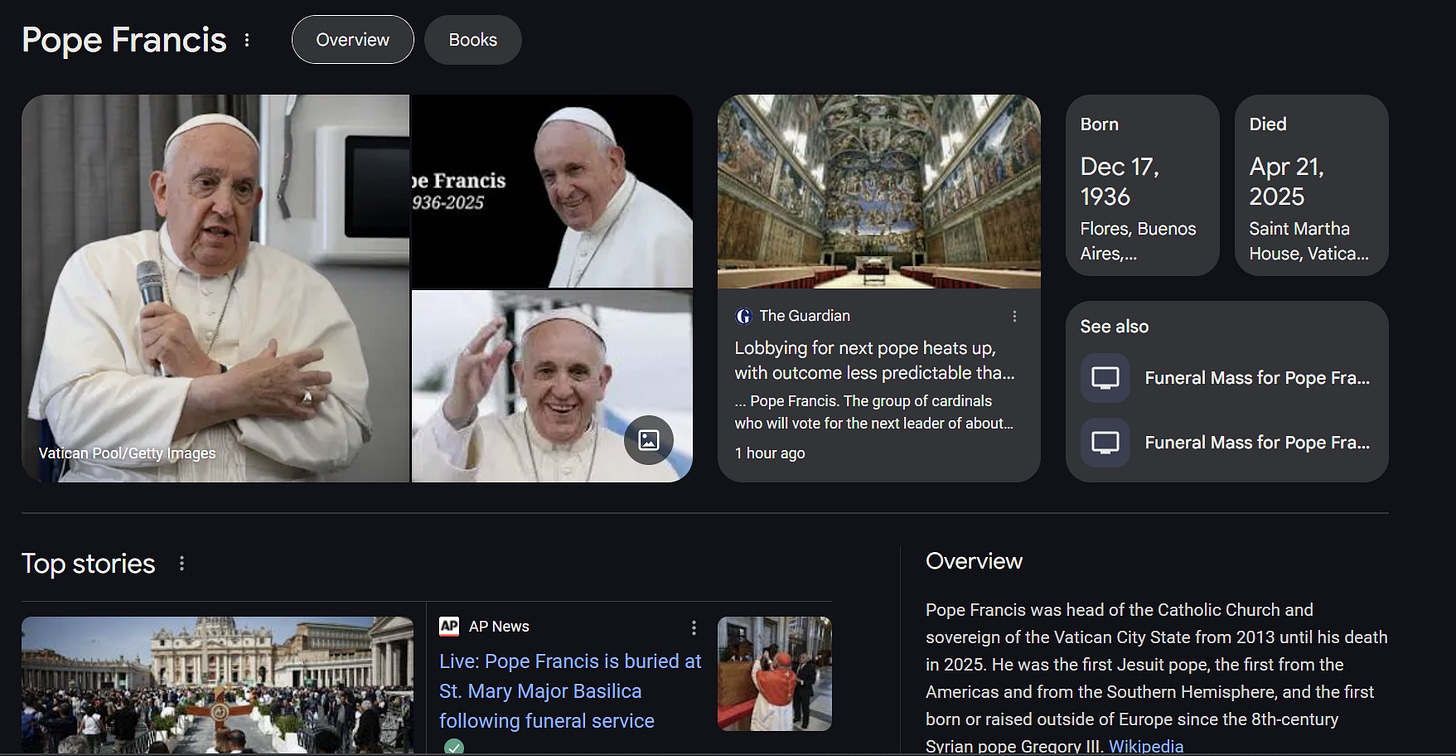

Not only does AI garbage read like…well, trash, but, as a whole, it sucks for most everything, including accuracy. For example, I asked ChatGPT to check my facts on an article that I had already written:

As you can see, that went over splendidly.

It reads terribly, it harms the planet, it shreds the idea of privacy (if you haven’t yet, go turn off ‘train this model for other users’ on your GPT account. Seriously.), and it profits from exploitation.

So I’ve decided that I will no longer be utilizing AI.

Personally, I don’t consider my previous use of AI to be plagiarism because it was entirely my work and research, but I recognize that the effects are just the same: Impact > Intent. Even if I was operating out of ignorance, people were still hurt. I am not special, nor exempt.

Now, before you start in the comments…

Please, Stop With The AI “Gotchas”

If you’re using AI on Substack and not disclosing it to your readers, stop. Seriously. It’s not harmless. I’m not here to scold you (hello, pot—meet kettle). But I want you to understand that it is harmful to you and other creators. You are damaging trust that cannot be re-earned once it’s lost, and you are hurting other creators by pretending they weren’t affected.

To those who don’t: You’re mad about AI. You should be. But digging through people’s work hunting for “proof” that they secretly used AI isn’t activism. It’s just annoying—and not funny-annoying. "Actively making things worse" annoying.

You’re not taking down Big Tech. You’re not protecting artists. At this point, you're not even fighting for anything—you're chasing relevance. It screams bored mean-girl.

If we’re being honest with ourselves, most of the people using AI aren’t cartoon villains. Some are insecure (me). Some are misinformed (also me). Some genuinely think it’s “just a tool” like spellcheck (me, again). Ignorance isn't the crime.

If you really think someone’s crossed a line, ask. Be a person about it. Have a discussion. The goal should be to fix problems, not rack up scalps. Basically:

Don’t be a dick, bestie.

If someone had addressed this with me, I would’ve learned something, and a lot sooner. Instead, I got insecure, scared, and defensive, and ran to ChatGPT to validate me…further perpetuating the cycle.

That’s no one’s fault but my own—but I am offering that personal anecdote for those who do genuinely want to make a meaningful impact.

AI isn’t going anywhere any time soon. That’s the unfortunate truth. Public shame is a useful, valuable tool. Don’t water it down by using it where it won’t be effective.

Before you make that negative comment, consider if you’re going to actually do good—or if it’s just to make you feel better. Then consider therapy.

What you’re fighting for matters. How you fight for it matters even more.

Which leads me to my next point: You can’t fight what you don’t understand. As with attrition warfare and invading Finland in the winter, you must understand the enemy or perish.

Here’s how you can survive the AI wave on Substack:

Understanding How AI Actually Works

AI doesn’t think. It doesn’t understand. It doesn’t know anything.

AI is not your little robot buddy. It’s a glorified pattern recognition engine duct-taped to a probability calculator. When you give AI a prompt, it doesn’t ponder your words thoughtfully.

It goes:

"When humans write about ‘deep existential pain,’ what statistically comes next? Probably 'loss' or 'emptiness.' I'll string those together."

That's it. That’s the whole magic trick. AI is parroting patterns it saw a billion times during training—with zero comprehension. That’s also probably why you keep getting frustrated that AI won’t follow instructions—it doesn’t understand them because you’re giving instructions like you would to a human.
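For the code-curious, here is a toy sketch of that trick in Python. It is nowhere near a real model (those are neural networks trained on vastly more text than three sentences). The tiny corpus and the function names `train_bigrams` and `most_likely_next` are made up for illustration. All it does is count which word follows which, then pick the most common follower.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data" (an assumption, for illustration only).
CORPUS = (
    "deep existential pain feels like loss . "
    "deep existential pain feels like emptiness . "
    "deep existential pain feels like loss ."
)

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    followers = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def most_likely_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in followers:
        return None
    return followers[word].most_common(1)[0][0]

model = train_bigrams(CORPUS)
print(most_likely_next(model, "like"))  # prints: loss
```

Notice that nothing in there knows what "loss" means. It only knows "loss" showed up after "like" more often than "emptiness" did.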

The AI Controversy

This is the foundation of one of the biggest ethical controversies in AI: Training data was scraped without consent. Creative work was treated like free raw material, and most creators weren’t even informed, let alone compensated. That includes books, articles, art, blog posts, and more. Then the companies that built these models turned around and said:

“Hey! Look at this amazing thing we built!”

Built out of what, Chad? My DeviantArt posts? My novel? Thanks.

Even if the AI can’t understand what it absorbed, it still replicates styles, rhythms, structures—everything but the actual soul of the thing. Think Frankenstein's monster, but if it was built out of Pinterest mood boards. Or, more accurately: it’s plagiarism with extra steps.

Do AI Detectors Work?

The tools people use to “catch AI writing”? They’re also built using AI. Yes. AI detectors are just another form of AI.

They work by checking:

Perplexity: How predictable is the text? AI tends to write Very Cleanly™, which makes its text easy to predict.

Burstiness: Do the sentences all sound too uniform?

Which sounds fancy, until you realize:

Skilled human writers often sound "too clean" and get flagged.

Non-native speakers get flagged constantly just for writing more simply.

And if you edit AI text even a little, most detectors can't tell the difference anymore.

AI detectors are like training a bloodhound on the smell of hamburgers and then asking it to find vegan cheese. They're gonna be wrong a lot—but what did you expect?

In short: AI detectors are a false sense of security in which you utilize the same system you’re criticizing. You got got.
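To make "burstiness" concrete, here is a minimal sketch in Python. It is an assumption about how such a score could be computed, not how any actual detector works: it splits text into sentences and measures how much the sentence lengths vary. The function names `sentence_lengths` and `burstiness` and both sample texts are invented for illustration.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., !, or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Population standard deviation of sentence lengths (0.0 = perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "No. I spent the whole afternoon arguing with a chatbot about commas. Worth it."

print(burstiness(uniform))  # every sentence is 4 words, so the score is 0.0
print(burstiness(varied))   # lengths 1, 11, 2 give a much higher score
```

A score like this would flag the first sample as "too uniform," even though a human could easily have written it. That is the whole problem in miniature.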

That being said, while AI detectors analyze patterns and structure, humans pick up on different, subtler signals—the little gut-check moments where something just feels off.

How to Actually Spot AI Without Needing a Detector (It’s Not Em Dashes Anymore)

THESE ARE NOT FOOLPROOF. DO NOT JUST RECKLESSLY ACCUSE SOMEONE OF AI USE. This is PURELY for you to be able to make informed decisions on the artists you support, written or otherwise.

1. The "It's Not This—It's That" Sentence Trap

Once or twice, sure. Contrasts are strong.

But when you see this construction repeated like it's getting paid by the shift—that's a dead giveaway. AI loves setting up contrast in order to sound smarter.

2. The Need to Conclude Everything

AI cannot resist wrapping every single section with a tidy little summary. It’s allergic to open endings and uncomfortable silences—which, ironically, humans are great at.

In Conclusion: If a summary doesn’t fit, or it doesn’t pick a side…maybe, just maybe, it’s AI.

Psst, see what I did there? 😉

3. Metaphors That Make You Feel Nothing

"Moth to a flame." "Silk over steel." Even when the metaphor technically fits, if it doesn’t actually feel earned or emotionally resonant, look for other signs of AI. This alone is not indicative of AI use. Don’t use this to shame a new writer.

4. People-Pleasing to the Point of Nausea

Everything is "maybe," "possibly," "some might say," "it could be argued." Heaven fkn forbid it just have an opinion. AI is built to avoid offense—which means it waters down every stance into lukewarm.

5. Emoji Overkill (and Weird Choices)

✍🏻👀☠️🤖🦉🚀

Nothing says "I'm definitely human" like spamming completely unrelated emojis in a paragraph about economic policy.

6. Patterned, Uniform Sentence Structures

Real human writing has texture—short punches next to long rambles next to weird asides.

AI writing often has the same rhythm over and over: medium-length sentence, medium-length sentence, medium-length sentence; it reads like someone who's legally banned from using semicolons.

STOP COMING FOR ME OVER EM DASHES, I GREW UP ON FANFICTION.

—but, yes, they can be a sign of AI. Though recent updates have messed with that too, so...good luck, detective.

7. No Personal Stories, Ever

AI has no childhood trauma. No bad breakups. No embarrassing stories at cousin Amanda’s wedding. And you can tell.

8. Inconsistent Use of Jargon

One minute it’s "quantum computational matrices," the next it’s "brain computers go brr." AI tends to either use technical terms slightly wrong, or sprinkle in big words awkwardly like it’s seasoning a pot with a blindfold on.

Don’t even get me started on using “quantum [BLANK]” to explain the unexplainable.

9. Lists. Lists Everywhere.

AI lives for bullet points and numbered lists. (Hi, by the way. Welcome to this list.) It’s not that humans don’t love a good list—but if every idea is chopped into 1–2 sentence blurbs and the structure never breathes or blends naturally, you’re probably looking at a machine trying to sound organized. And failing.

Important: Even with all that, humans are only about 57% accurate at spotting AI writing—just a little better than flipping a coin. Don't feel bad if you get fooled. That’s part of the problem.

Be mad. Be critical. Be honest. And make sure you’re aimed at the right target.

What is your stance on AI? Anything we didn’t cover? Let us know in the comments!

—Your Friends at ICYDAK

Finding myself increasingly cynical and suspicious. Eg when I see a numbered list like yours here at the end, with the weird capitalisation of each word in the subheading, I think, “urgh must be AI” automatically. Was it?

You’ve really clarified a lot of things that I felt but couldn’t necessarily put into words regarding the AI pattern recognition. Except the not picking a side bit. Try asking it for political commentary. Woof 😒